Phi-3: small but mighty

Phi-3 is a family of open AI models developed by Microsoft. As small language models (SLMs), they stand out for their capability and cost-effectiveness, and models such as Phi-3-mini have quickly become popular. In benchmarks covering language, reasoning, coding, and math, they consistently outperform models of similar and larger size. Let’s explore these models in detail.

Spark of an Idea

Last year, while reading bedtime stories to his daughter, Microsoft’s Ronen Eldan wondered how she was learning words and connecting them. This sparked the idea of training AI models on text that uses only the simple vocabulary a 4-year-old can understand. The approach led to a new class of small language models (SLMs) that are more accessible and efficient.

Evolution of Language Models

Large language models (LLMs) are powerful but require substantial computing resources. Microsoft, however, has developed SLMs that retain many of the capabilities of LLMs but are more compact and trained on smaller datasets.

Introducing Phi-3 Family

Microsoft has announced the Phi-3 family of SLMs, which it describes as the most capable and cost-effective models of their size. The first model, Phi-3-mini, has 3.8 billion parameters and outperforms larger models on a range of benchmarks. It is available in the Microsoft Azure AI Model Catalog, on Hugging Face and Ollama, and as an NVIDIA NIM microservice.

Performance Overview

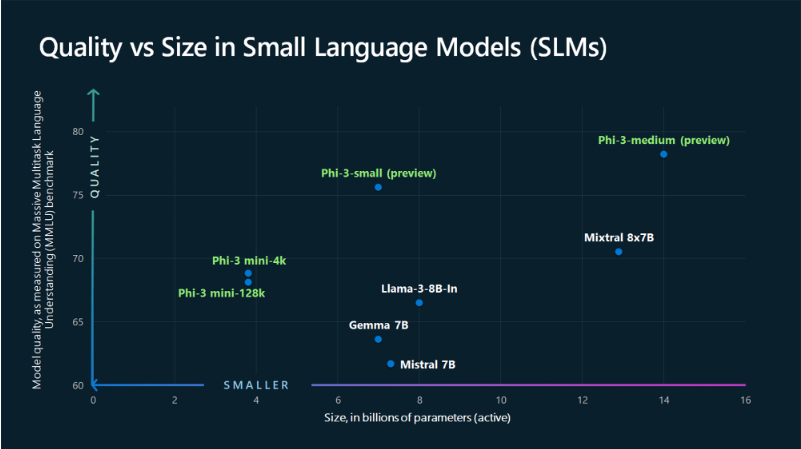

The plot illustrates the relationship between model size (in billions of parameters) and quality (as measured on the Massive Multitask Language Understanding, or MMLU, benchmark) for various small language models (SLMs).

Phi-3 Models Performance:

- Phi-3-mini-128k and Phi-3-mini-4k: Despite their small size (3.8 billion parameters), these two variants, which differ in context length (128K versus 4K tokens), achieve MMLU scores of around 70, outperforming many larger models.

- Phi-3-small: Currently in preview, this model shows a significant improvement in quality, scoring close to 75, while maintaining a compact size of about 7 billion parameters.

- Phi-3-medium: Also in preview, this model reaches the highest quality score of approximately 78 among the listed models, with a size of around 14 billion parameters.

Comparison with Other Models:

- Llama-3-8B-In: Positioned at around 8 billion parameters, it has a lower quality score compared to Phi-3 models of similar or smaller size.

- Gemma 7B and Mistral 7B: These 7-billion-parameter models score below the Phi-3 models on MMLU, including the smaller Phi-3-mini.

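- Mixtral 8x7B: Despite its much larger size (it is a sparse mixture-of-experts model with eight 7-billion-parameter-scale experts, about 47 billion parameters in total, of which roughly 13 billion are active per token), it does not outperform Phi-3-medium in quality.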

Efficiency: Phi-3 models demonstrate remarkable efficiency, achieving high-quality results with fewer parameters compared to their counterparts.

Scalability: As the Phi-3 family scales up in size, from mini to medium, there is a consistent and significant improvement in model quality.

Innovation Impact: The Phi-3 family’s results suggest that Microsoft’s training approach and data selection strategy, rather than parameter count alone, account for much of the models’ quality, which is what makes them both capable and cost-effective.
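To make the efficiency and scalability points concrete, here is a small Python sketch that divides each Phi-3 model’s approximate MMLU score by its approximate size. The figures are the rounded values quoted in this article, not official benchmark results, and "points per billion parameters" is a crude illustrative ratio chosen for this example rather than a standard metric; the names `APPROX_RESULTS`, `points_per_billion`, and `efficiency_table` are hypothetical.

```python
# Approximate (MMLU score, size in billions of parameters) pairs taken from
# the rounded figures in this article; they are NOT official benchmark numbers.
APPROX_RESULTS = {
    "Phi-3-mini": (70.0, 3.8),
    "Phi-3-small": (75.0, 7.0),
    "Phi-3-medium": (78.0, 14.0),
}


def points_per_billion(score: float, params_b: float) -> float:
    """Crude size-efficiency ratio: MMLU points per billion parameters."""
    return score / params_b


def efficiency_table(results):
    """Return (name, score, params, ratio) rows, highest ratio first."""
    rows = [
        (name, score, params, round(points_per_billion(score, params), 2))
        for name, (score, params) in results.items()
    ]
    return sorted(rows, key=lambda row: row[3], reverse=True)


if __name__ == "__main__":
    for name, score, params, ratio in efficiency_table(APPROX_RESULTS):
        print(f"{name:13s} ~{score:.0f} MMLU at ~{params:g}B -> {ratio} points/B")
```

With these figures the absolute score rises from mini to medium while the per-parameter ratio falls, which is the usual diminishing-returns pattern. The ratio only compares Phi-3 models with each other; comparing against other model families would require their scores from the plot.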

Future Models

Additional models in the Phi-3 family, such as Phi-3-small (7 billion parameters) and Phi-3-medium (14 billion parameters), will be available soon. Like other SLMs, they are well suited to simpler tasks, easier to fine-tune, and more accessible to organizations with limited resources.

Customizing for Needs

Sonali Yadav, principal product manager for Generative AI at Microsoft, notes that customers can now choose models best suited to their needs, whether they require small or large models, or a combination of both. Luis Vargas, vice president of AI at Microsoft, emphasizes the advantages of SLMs for local applications, especially where quick responses or offline capabilities are crucial.

Edge and Industry Applications

SLMs also offer solutions for regulated industries needing high-quality results while keeping data on-premises. Vargas and Yadav highlight the potential for SLMs in mobile devices and other edge scenarios, enhancing privacy and reducing latency.

High-Quality Training Data

Microsoft’s breakthrough in SLMs came from focusing on the quality of training data, an idea that started with children’s stories. Researchers used a larger LLM to generate millions of tiny stories from a restricted vocabulary, then extended the approach to carefully selected web data and synthetic data, producing SLMs that deliver strong results in a compact form.
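The tiny-story idea can be illustrated with a short Python sketch of the prompt-building step. It assumes a recipe in which each prompt asks a larger LLM to write a simple story that must include a randomly chosen noun, verb, and adjective from a small word list. The word lists, the prompt wording, and the helper names `make_story_prompt` and `make_prompts` are hypothetical, and the call to the larger LLM is deliberately left out.

```python
import random

# Hypothetical miniature vocabulary; the researchers' actual word list is not reproduced here.
NOUNS = ["dog", "ball", "tree", "cake"]
VERBS = ["jump", "share", "find", "build"]
ADJECTIVES = ["happy", "tiny", "red", "brave"]


def make_story_prompt(rng: random.Random) -> str:
    """Build one prompt that forces a random noun, verb, and adjective into a simple story."""
    noun = rng.choice(NOUNS)
    verb = rng.choice(VERBS)
    adjective = rng.choice(ADJECTIVES)
    return (
        "Write a short story that a 4-year-old could understand, using only simple words. "
        f"The story must use the noun '{noun}', the verb '{verb}' and the adjective '{adjective}'."
    )


def make_prompts(n: int, seed: int = 0) -> list[str]:
    """Generate n prompts reproducibly; each would then be sent to a larger LLM (not shown)."""
    rng = random.Random(seed)
    return [make_story_prompt(rng) for _ in range(n)]


if __name__ == "__main__":
    for prompt in make_prompts(3):
        print(prompt)
```

Randomizing the required words is what pushes the generated stories to cover many combinations of a small vocabulary; repeating this step millions of times yields a large but linguistically simple dataset.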

Conclusion

In summary, Microsoft’s Phi-3 models demonstrate that small language models can achieve remarkable performance, making AI more accessible and versatile for a wide range of applications.